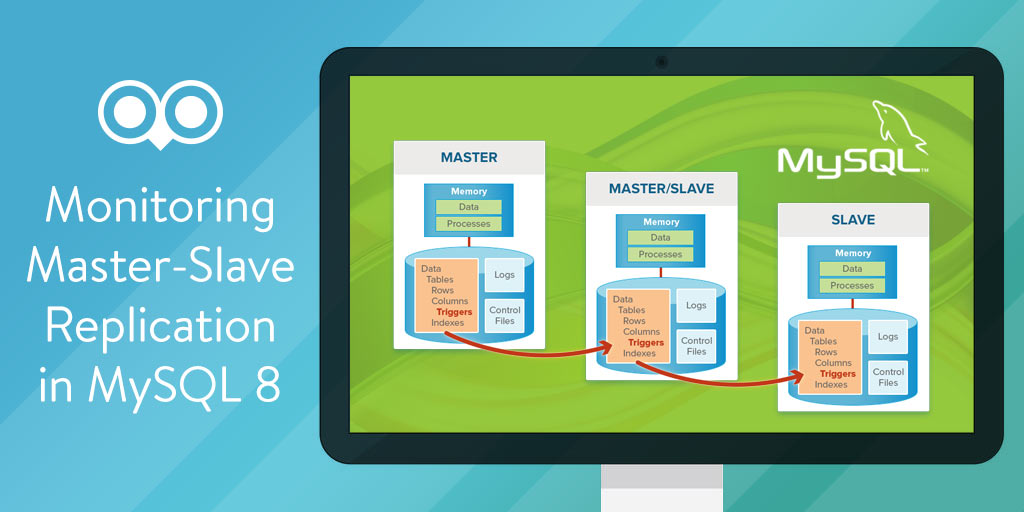

MySQL 8 introduced a number of enhancements to improve both replication performance and the monitoring thereof. Improvements included more efficient replication of small updates on big JSON documents, the addition of Performance Schema tables for slave performance metrics, tracing and debug logging improvements, among others. With regards to monitoring, Group Replication has been improved by introducing new replication timestamps, additional columns to the performance schema, as well as by making the relationship between replication threads more efficient. We covered the various improvements to replication performance in the Replication Performance Enhancements in MySQL 8 blog. Today’s blog will provide some practical guidelines on monitoring your MySQL 8 master-slave and group (cluster) replication.

Two Types of MySQL Replication

As mentioned in previous blogs, MySQL supports two types of replication: statement-based and row-based:

- In statement-based logging, the master writes SQL statements to the binary log. Replication of the master to the slave works by executing the logged SQL statements on the slave. Abbreviated to SBR, statement-based replication corresponds to the MySQL statement-based binary logging format.

- In row-based logging, the master writes events to the binary log that indicate how individual table rows are changed. Replication of the master to the slave works by copying the events representing the changes to the table rows to the slave. This is called row-based replication, which can be abbreviated as RBR.

- You can also configure MySQL to use a mix of both statement-based and row-based logging, depending on which is most appropriate for the change to be logged. This is called mixed-format logging. When using mixed-format logging, a statement-based log is used by default. Depending on certain statements, and also the storage engine being used, the log is automatically switched to row-based in particular cases. Replication using the mixed format is referred to as mixed-based replication or mixed-format replication.

How you go about tracking replication progress will largely depend on the logging and replication types employed.

General Replication Monitoring

In the Replication Performance Enhancements in MySQL 8 blog, we learned that new replication timestamps were added to the information provided by the SHOW SLAVE STATUS. It provides status information on essential parameters of the slave threads and is useful in ensuring that replication is occurring and that there were no errors between the slave and the master.

It provides a few key pieces of information, such as:

- Slave_IO_Running tells us if the Slave is able to connect to the Master.

- Slave_SQL_Running indicates if data received from the Master is being processed.

- Seconds_Behind_Master shows difference between last timestamp read in the binlogs and current time. This is important to understand. It does not report directly the delay between when information is updated/inserted on the master and recorded on the slave. A slow network can cause an artificially inflated number, as well as long running queries or blocking/locking operations. If it lags behind the Master by any appreciable level, it’s usually a clear sign that replication is not working properly.

- Last_IO_Errno, Last_IO_Error, Last_SQL_Errno, Last_SQL_Error, are all pretty much what they say, the last error number and error from the IO or SQL threads

Here is some typical SHOW SLAVE STATUS output with the above fields highlighted. The “\G” statement terminator is utilized instead of a semi-colon in order to produce a more readable vertical layout:

mysql> SHOW SLAVE STATUS\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: stg04-cf.copperfroghosting.net

Master_User: root

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000006

Read_Master_Log_Pos: 106

Relay_Log_File: stg06-cf-relay-bin.000002

Relay_Log_Pos: 251

Relay_Master_Log_File: mysql-bin.000006

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 106

Relay_Log_Space: 409

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

1 row in set (0.00 sec)

Using Performance Schema Replication Tables

Being such an integral process, DBAs frequently want to know how replication is proceeding. Traditionally, the health of MySQL replication was monitored using the SHOW SLAVE STATUS command. Eventually, this static command was no longer able to live up to people’s expectations. This is because SHOW SLAVE STATUS does not scale well in the sense that there are multiple parts of the slave server:

- the receiver

- the applier

- intermediate queues, etc

Once transactions are received by a replication channel’s receiver (I/O) thread, they are added to the channel’s relay log file and passed through to an applier thread.

In some cases, there are multiple instances of each part.

SHOW SLAVE STATUS now produces 54 fields, and interleaves a lot of different information together. As of MySQL 5.7, it had reached such a point where an SQL interface that allowed users to query exactly what is required from the replication status was in order. So, the MySQL team decided to add tables to monitor replication to the performance_schema database.

The benefits provided by the new tables include:

- Easier to access exactly what is required, through an SQL interface.

- Pieces of data can be assigned to variables and thus used in stored procedures etc.

- Easier testing with SELECT item from Performance Schema tables.

- Logically unrelated information is split into different tables.

- Cross-reference monitoring data seamlessly by joining with other Performance Schema tables, Information_Schema tables etc.

- Easier to extend.

- More flexibility to accommodate a lot of replication information but still be organized and easy to use.

Six tables were created to deliver the above benefits:

- replication_connection_configuration

- replication_connection_status

- replication_execute_configuration

- replication_execute_status: All replication applier module status information of the replication_execute_status table is further split based on overall stats: coordinator’s status or worker’s status. That gives us two more tables:

- replication_execute_status_by_coordinator

- replication_execute_status_by_worker

There are also 8 new raw and summary instrument TRANSACTIONS tables:

- events_transactions_current

- events_transactions_history

- events_transactions_history_long

- events_transactions_summary_by_account_by_event_name

- events_transactions_summary_by_host_by_event_name

- events_transactions_summary_by_thread_by_event_name

- events_transactions_summary_by_user_by_event_name

- events_transactions_summary_global_by_event_name

Developers familiar with the Performance Schema interface can even extend the replication tables to provide additional information by adding rows to the tables.

For a request read as a statement from the relay log on a replication slave:

- Statements in the relay log are stored and are read as text. There is no network protocol, so the statement/abstract/new_packet instrument is not used. Instead, the initial instrument is statement/abstract/relay_log.

- When the statement is parsed, the exact statement type is known. For example, if the request is an INSERT statement, the Performance Schema refines the instrument name from statement/abstract/Query to statement/sql/insert, which becomes the final name.

An Example

When the SQL thread has executed all events from the relay log, it enters MYSQL_BIN_LOG::wait_for_update_relay_log(), which then waits for the “update_cond” condition to be raised. At this point in time, the slave goes idle. Ever since MySQL v5.5, we can track conditions using the Performance Schema. Within the Performance Schema, conditions get timed from the time they were waiting for the condition to be raised by another thread, in other words, the total time that the SQL thread was idle.

The condition waited for within wait_for_update_relay_log() maps to the wait/synch/cond/sql/MYSQL_RELAY_LOG::update_cond event within the Performance Schema. The following code snippet shows a snapshot from the MySQL server that is in the middle of a replication tree ( both the slave and master reside on the same box, so both the relay logs and binary logs are enabled ):

mysql> select * from events_waits_summary_global_by_event_name order by sum_timer_wait desc limit 5; +------------------------------------------------------------+------------+--------------------+----------------+----------------+----------------+ | EVENT_NAME | COUNT_STAR | SUM_TIMER_WAIT | MIN_TIMER_WAIT | AVG_TIMER_WAIT | MAX_TIMER_WAIT | +------------------------------------------------------------+------------+--------------------+----------------+----------------+----------------+ | wait/synch/cond/sql/MYSQL_RELAY_LOG::update_cond | 595360 | 677877417470900396 | 15021936 | 1138128892553 | 20142735786288 | | wait/synch/cond/sql/MYSQL_BIN_LOG::update_cond | 60466 | 167695654597814052 | 187124608 | 2773387450762 | 84468739641228 | | wait/io/file/myisam/kfile | 3237163 | 844640545485132 | 696600 | 260919992 | 1122678434952 | | wait/io/file/myisam/dfile | 4156623 | 377275756973940 | 206712 | 90764968 | 2911791051108 | | wait/synch/cond/sql/COND_queue_state | 11 | 323434243065288 | 166075717824 | 29184140278662 | 59224373303796 | +------------------------------------------------------------+------------+--------------------+----------------+----------------+----------------+

The wait/synch/cond/sql/MYSQL_BIN_LOG::update_cond is a similar condition that is used by the IO thread logged in to the master, to signal that new events have been written to the binary log.

Monitoring Row-based Replication

The current progress of the replication thread when using row-based replication is also monitored through Performance Schema instrument stages, enabling you to track the processing of operations and check the amount of work completed as well as the estimated work. When these Performance Schema instrument stages are enabled, the events_stages_current table shows stages for replication threads and their progress.

.

To track progress of all three row-based replication event types (write, update, delete):

Enable the three Performance Schema stages by issuing:

mysql> UPDATE performance_schema.setup_instruments SET ENABLED = ‘YES’

-> WHERE NAME LIKE ‘stage/sql/Applying batch of row changes%’;

Wait for some events to be processed by the replication and then check on its progress by looking at the events_stages_current table. For example to get progress for update events use the following statement:

mysql> SELECT WORK_COMPLETED, WORK_ESTIMATED FROM performance_schema.events_stages_current

-> WHERE EVENT_NAME LIKE ‘stage/sql/Applying batch of row changes (update)’

If binlog_rows_query_log_events is enabled, information about queries is stored in the binary log and is stored in the processlist_info column. Hence, here’s the SELECT statement to see the original query that triggered the event:

mysql> SELECT db, processlist_state, processlist_info FROM performance_schema.threads

-> WHERE processlist_state LIKE ‘stage/sql/Applying batch of row changes%’ AND thread_id = N;

An Example

Here’s an example that shows Replication Applier Status Details:

mysql> select * from performance_schema.replication_applier_status_by_coordinator;

+————–+———–+—————+——————-+——————–+———————-+

| CHANNEL_NAME | THREAD_ID | SERVICE_STATE | LAST_ERROR_NUMBER | LAST_ERROR_MESSAGE | LAST_ERROR_TIMESTAMP |

+————–+———–+—————+——————-+——————–+———————-+

| | 24 | ON | 0 | | 0000-00-00 00:00:00 |

| 56-cluster | 26 | ON | 0 | | 0000-00-00 00:00:00 |

+————–+———–+—————+——————-+——————–+———————-+

mysql> select * from performance_schema.replication_applier_status_by_worker;

+————–+———–+———–+—————+———————–+——————-+——————–+———————-+

| CHANNEL_NAME | WORKER_ID | THREAD_ID | SERVICE_STATE | LAST_SEEN_TRANSACTION | LAST_ERROR_NUMBER | LAST_ERROR_MESSAGE | LAST_ERROR_TIMESTAMP |

+————–+———–+———–+—————+———————–+——————-+——————–+———————-+

| | 1 | 27 | ON | <GTID SET> | 0 | | 0000-00-00 00:00:00 |

| | 2 | 29 | ON | <GTID SET> | 0 | | 0000-00-00 00:00:00 |

| | 3 | 31 | ON | <GTID SET> | 0 | | 0000-00-00 00:00:00 |

| | 4 | 33 | ON | <GTID SET> | 0 | | 0000-00-00 00:00:00 |

| 56-cluster | 1 | 28 | ON | <GTID SET> | 0 | | 0000-00-00 00:00:00 |

| 56-cluster | 2 | 30 | ON | <GTID SET> | 0 | | 0000-00-00 00:00:00 |

| 56-cluster | 3 | 32 | ON | <GTID SET> | 0 | | 0000-00-00 00:00:00 |

| 56-cluster | 4 | 34 | ON | <GTID SET> | 0 | | 0000-00-00 00:00:00 |

+————–+———–+———–+—————+———————–+——————-+——————–+———————-+

Conclusion

In today’s blog, we learned about new Performance Schema tables that can be utilized for monitoring MySQL 8 master-slave and group (cluster) replication. We also reviewed some of the key differences between statement-based and row-based logging. In a future blog we’ll see how Monyog makes monitoring replication easier.